OK, I have been working on a random image selector and queue system (so you don't see the same images too often).

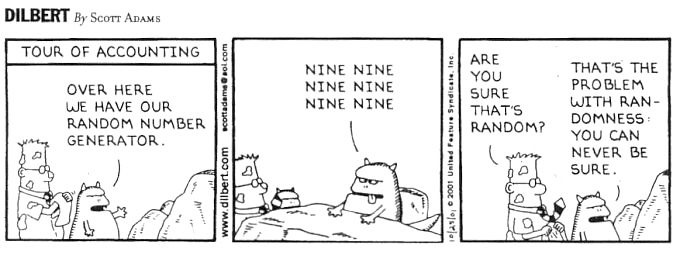

All was going swimmingly (as far as my crappy code does) until I got to the random bit. I wanted to test it, but how do you test for it? There is no Debug.Assert(i.IsRandom) (sadly) :D

So, I got my brain on it after watering it with some tea and came up with the following, I was just wondering if I could have your thoughts?

- Basically I knew the random bit was the problem, so I ripped that out to a delegate (which would then be passed to the object's constructor).

- I then created a class that pretty much performs the same logic as the live code, but remembers the value selected in a private variable.

- I then threw that delegate to the live class and tested against that:

i.e.

`Debug.Assert(myObj.RndVal == RndIntTester.ValuePassed);`
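The arrangement described in the bullets above can be sketched roughly like this. It is in Python rather than C#, and `ImageSelector`, `RecordingPicker`, `rnd_val` and `value_passed` are made-up stand-ins for the live class and the test delegate, not the actual code:

```python
import random
from typing import Callable, List, Optional


class RecordingPicker:
    """Test double: picks an index like the live code would, but remembers it."""

    def __init__(self, pick: Callable[[int], int] = random.randrange):
        self._pick = pick
        self.value_passed: Optional[int] = None

    def __call__(self, upper: int) -> int:
        self.value_passed = self._pick(upper)
        return self.value_passed


class ImageSelector:
    """Hypothetical stand-in for the live class; the picker is injected."""

    def __init__(self, images: List[str], pick_index: Callable[[int], int]):
        self._images = list(images)
        self._pick_index = pick_index
        self.rnd_val: Optional[int] = None

    def next_image(self) -> str:
        self.rnd_val = self._pick_index(len(self._images))
        return self._images[self.rnd_val]


picker = RecordingPicker()
selector = ImageSelector(["a.png", "b.png", "c.png"], picker)
for _ in range(1000):
    selector.next_image()
    # Same check as the Debug.Assert above: the live class used the value it was given.
    assert selector.rnd_val == picker.value_passed
```

Once the picker is injectable, a fixed one such as `lambda n: 1` makes the selector fully deterministic, which is usually easier to assert against than a recorded random value.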

But I couldn't help but think, was I wasting my time? I ran that through lots of iterations to see if it fell over at any time etc.

Do you think I was wasting my time with this? Or could I have got away with:

GateKiller's answer reminded me of this:

Update to Clarify

- I should add that I basically never want to see the same result more than X number of times from a pool of Y size.

- The addition of the test container basically allowed me to see if any of the previously selected images were "randomly" selected.

- I guess technically the thing being tested here is not the RNG (since I never wrote that code) but the fact that I am expecting random results from a limited pool, and I want to track them.

There is a handy list of statistical randomness tests and related research on Wikipedia. Note that you won't know for certain that a source is truly random with most of these, you'll just have ruled out some ways in which it may be easily predictable.

There are whole books one can write about randomness and evaluating if something appears to be random, but I'll save you the pages of mathematics. In short, you can use a chi-square test as a way of determining how well an apparently "random" distribution fits what you expect.

If you're using Perl, you can use the Statistics::ChiSquare module to do the hard work for you.
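Outside Perl, the statistic is simple enough to compute by hand. Here is a minimal Python sketch, assuming you expect a uniform distribution over 10 buckets; `chi_square_uniform` is a name made up for this example, and 16.919 is the standard 5% critical value for 9 degrees of freedom:

```python
import random
from collections import Counter


def chi_square_uniform(counts):
    """Chi-square statistic for observed counts against a uniform expectation."""
    total = sum(counts)
    expected = total / len(counts)
    return sum((c - expected) ** 2 / expected for c in counts)


# 5% critical value for 9 degrees of freedom (10 buckets minus 1).
CRITICAL_5PCT_DF9 = 16.919

rng = random.Random(12345)  # seeded only so the run is repeatable
draws = Counter(rng.randrange(10) for _ in range(10_000))
counts = [draws[i] for i in range(10)]

stat = chi_square_uniform(counts)
print(f"chi-square = {stat:.2f}, suspicious = {stat > CRITICAL_5PCT_DF9}")
```

A statistic above the critical value only says the counts are unlikely under a uniform distribution. Even a good generator will exceed it about 5% of the time at this threshold.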

However if you want to make sure that your images are evenly distributed, then you probably won't want them to be truly random. Instead, I'd suggest you take your entire list of images, shuffle that list, and then remove an item from it whenever you need a "random" image. When the list is empty, you re-build it, re-shuffle, and repeat.

This technique means that given a set of images, each individual image can't appear more than once every iteration through your list. Your images can't help but be evenly distributed.
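A rough Python sketch of that shuffle-and-draw approach, with `ShuffleBag` being a name picked for this example:

```python
import random


class ShuffleBag:
    """Hands out every item once, in random order, before repeating any."""

    def __init__(self, items, rng=None):
        self._items = list(items)
        if not self._items:
            raise ValueError("ShuffleBag needs at least one item")
        self._rng = rng or random.Random()
        self._remaining = []

    def next(self):
        if not self._remaining:
            # The bag is empty: rebuild it from the full list and reshuffle.
            self._remaining = self._items[:]
            self._rng.shuffle(self._remaining)
        return self._remaining.pop()


images = ["a.png", "b.png", "c.png", "d.png"]
bag = ShuffleBag(images)
first_pass = [bag.next() for _ in range(len(images))]
assert sorted(first_pass) == sorted(images)
```

One thing to watch: the last image of one pass can also be the first image of the next, so back-to-back repeats are still possible at the boundary unless you guard against them.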

All the best,

Paul

I agree with Adam Rosenfield. For the situation you're talking about, the only thing you can usefully test for is distribution across the range.

The situation I usually encounter is that I'm generating pseudorandom numbers with my favourite language's PRNG, and then manipulating them into the desired range. To check whether my manipulations have affected the distribution, I generate a bunch of numbers, manipulate them, and then check the distribution of the results.

To get a good test, you should generate at least a couple orders of magnitude more numbers than your range holds. The more values you use, the better the test. Obviously if you have a really large range, this won't work since you'll have to generate far too many numbers. But in your situation it should work fine.

Here's an example in Perl that illustrates what I mean:

If the spread between the min and max occurrences is small, then your distribution is good. If it's wide, then your distribution may be bad. You can also use this approach to check whether your range was covered and whether any values were missed.

Again, the more numbers you generate, the more valid the results. I tend to start small and work up to whatever my machine will handle in a reasonable amount of time, e.g. five minutes.
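The same check can be sketched in Python (this is not the Perl example referred to above). `occurrence_spread` and `manipulated` are names invented here, and the range is assumed to be the integers 0 to `range_size - 1`:

```python
import random
from collections import Counter


def occurrence_spread(generate, range_size, samples):
    """Count how often each value in 0..range_size-1 turns up.

    Returns (fewest occurrences, most occurrences, values never seen).
    Values outside the range are not counted.
    """
    counts = Counter(generate() for _ in range(samples))
    per_value = [counts.get(v, 0) for v in range(range_size)]
    missed = [v for v, c in enumerate(per_value) if c == 0]
    return min(per_value), max(per_value), missed


rng = random.Random(2024)  # seeded only so the run is repeatable
range_size = 20


def manipulated():
    # The "manipulation" under test: scale a float in [0, 1) into the range.
    return int(rng.random() * range_size)


# 1000 samples per possible value, so the counts should sit near 1000 each.
lo, hi, missed = occurrence_spread(manipulated, range_size, range_size * 1000)
print(f"min={lo} max={hi} missed={missed}")
```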

To get a series of non-repeating random numbers:
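One way to do this in Python, as a sketch, is to sample without replacement. `non_repeating` is a helper name made up for the example:

```python
import random


def non_repeating(low, high, count, rng=random):
    """Return `count` distinct integers from low..high inclusive, in random order."""
    return rng.sample(range(low, high + 1), count)


picks = non_repeating(1, 50, 10)
print(picks)
```

`random.sample` raises `ValueError` if you ask for more values than the range contains, which is the behaviour you want here.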

Don't test the randomness; test to see if the results you're getting are desirable (or, rather, try to get undesirable results a few times before accepting that your results are probably going to be desirable). It will be impossible to ensure that you'll never get an undesirable result if you're testing a random output, but you can at least increase the chances that you'll notice it happening.

I would either take N pools of Y size, checking for any results that appear more than X number of times, or take one pool of N*Y size, checking every group of Y size for any result that appears more than X times (1 to Y, 2 to Y + 1, 3 to Y + 2, etc). What N is would depend on how reliable you want the test to be.
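The second variant (one long run, checking every window of Y consecutive results) might look something like this in Python. `max_repeats_per_window` is an invented name, and the values of `X`, `Y` and `N` are placeholders:

```python
import random
from collections import Counter


def max_repeats_per_window(results, window_size):
    """Largest number of times any single value appears within any
    run of `window_size` consecutive results."""
    if window_size <= 0 or len(results) < window_size:
        raise ValueError("need at least one full window of results")
    counts = Counter(results[:window_size])
    worst = max(counts.values())
    for i in range(window_size, len(results)):
        counts[results[i]] += 1                # value entering the window
        counts[results[i - window_size]] -= 1  # value leaving the window
        worst = max(worst, counts[results[i]])
    return worst


X, Y, N = 2, 10, 100  # placeholder limits: at most X repeats per Y results
results = [random.randrange(50) for _ in range(N * Y)]  # stand-in for the selector
print("worst window:", max_repeats_per_window(results, Y), "allowed:", X)
```

In a real test you would replace the stand-in with calls to your selector and assert that the returned value is at most `X`.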

Supposing you are testing a range for randomness within integers, one way to verify this is to create a gajillion (well, maybe 10,000 or so) 'random' numbers and plot their occurrence on a histogram.

The above shows a 'relatively' normal distribution.

If it looked more skewed, such as this:

Then you can see there is less randomness. As others have mentioned, there is the issue of repetition to contend with as well.
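The histogram idea can be tried without a plotting library. A small Python sketch, where `text_histogram` and the scale of one `#` per 50 occurrences are choices made for this example:

```python
import random
from collections import Counter


def text_histogram(values, bar_unit=50):
    """Return one text line per distinct value, with a bar of '#' marks
    (one mark per `bar_unit` occurrences) followed by the exact count."""
    counts = Counter(values)
    lines = []
    for value in sorted(counts):
        bar = "#" * (counts[value] // bar_unit)
        lines.append(f"{value:>3} | {bar} {counts[value]}")
    return lines


rng = random.Random(7)  # seeded only so the run is repeatable
for line in text_histogram([rng.randint(1, 10) for _ in range(10_000)]):
    print(line)
```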

If you were to write a binary file of say 10,000 random numbers from your generator using, say a random number from 1 to 1024 and try to compress that file using some compression (zip, gzip, etc.) then you could compare the two file sizes. If there is 'lots' of compression, then it's not particularly random. If there isn't much of a change in size, then it's 'pretty random'.

Why this works

Compression algorithms look for patterns (repetition and otherwise) and reduce them in some way. One way to look at these compression algorithms is as a measure of the amount of information in a file. A file that compresses heavily has little information (i.e. randomness), and a file that barely compresses has much information (randomness).
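Here is a quick Python sketch of the comparison using `zlib`. It assumes one byte per value (0 to 255) so that unused bits in a wider encoding don't make the data compress regardless of how random it is; `compression_ratio` is a helper invented for the example:

```python
import random
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size; near 1.0 means barely compressible."""
    return len(zlib.compress(data, 9)) / len(data)


rng = random.Random(99)  # seeded only so the run is repeatable
random_bytes = bytes(rng.randrange(256) for _ in range(10_000))
patterned_bytes = bytes(i % 16 for i in range(10_000))  # obvious repeating pattern

print(f"random:    {compression_ratio(random_bytes):.3f}")
print(f"patterned: {compression_ratio(patterned_bytes):.3f}")
```

The random data should come out at roughly its original size (possibly a few bytes larger because of the compression header), while the patterned data shrinks to a small fraction.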