Disassembling write(1,"hi",3) on Linux, built with gcc -s -nostdlib -nostartfiles -O3, results in:

ba03000000 mov edx, 3 ; thanks for the correction jester!

bf01000000 mov edi, 1

31c0 xor eax, eax

e9d8ffffff jmp loc.imp.write

I'm not into compiler development, but since every value moved into these registers is constant and known at compile time, I'm curious why gcc doesn't use dl, dil, and al instead.

Some may argue that this won't make any difference in performance, but there's a big difference in executable size between mov $1, %eax => b801000000 and mov $1, %al => b001 when we are talking about thousands of register accesses in a program. Not only is small size part of a program's elegance, it also affects performance.

Can someone explain why "GCC decided" that it doesn't matter?

Partial registers entail a performance penalty on many x86 processors because they are renamed into different physical registers from their whole counterpart when written. (For more about register renaming enabling out-of-order execution, see this Q&A).

But when an instruction reads the whole register, the CPU has to detect the fact that it doesn't have the correct architectural register value available in a single physical register. (This happens in the issue/rename stage, as the CPU prepares to send the uop into the out-of-order scheduler.)

It's called a partial register stall. Agner Fog's microarchitecture manual explains it pretty well:

6.8 Partial register stalls (PPro/PII/PIII and early Pentium-M)

Partial register stall is a problem that occurs when we write to part of a 32-bit register and later read from the whole register or a bigger part of it.

Example:

; Example 6.10a. Partial register stall

mov al, byte ptr [mem8]

mov ebx, eax ; Partial register stall

This gives a delay of 5 - 6 clocks. The reason is that a temporary register has been assigned to AL to make it independent of AH. The execution unit has to wait until the write to AL has retired before it is possible to combine the value from AL with the value of the rest of EAX.

Behaviour in different CPUs:

- Intel early P6 family: see above: stall for 5-6 clocks until the partial writes retire.

- Intel Pentium-M (model D) / Core2 / Nehalem: stall for 2-3 cycles while inserting a merging uop. (see this Q&A for a microbenchmark writing AX and reading EAX with or without xor-zeroing first)

- Intel Sandybridge: insert a merging uop for low8/low16 (AL/AX) without stalling, or for AH/BH/CH/DH while stalling for 1 cycle.

- Intel IvyBridge (maybe), but definitely Haswell / Skylake: AL/AX aren't renamed, but AH still is. See: How exactly do partial registers on Haswell/Skylake perform? Writing AL seems to have a false dependency on RAX, and AH is inconsistent.

- All other x86 CPUs (Intel Pentium4, Atom / Silvermont / Knight's Landing, all AMD, Via, etc.): partial registers are never renamed. Writing a partial register merges into the full register, making the write depend on the old value of the full register as an input.

Without partial-register renaming, the input dependency for the write is a false dependency if you never read the full register. This limits instruction-level parallelism because reusing an 8 or 16-bit register for something else is not actually independent from the CPU's point of view (16-bit code can access 32-bit registers, so it has to maintain correct values in the upper halves). It also makes AL and AH not independent. When Intel designed the P6 family (PPro released in 1995), 16-bit code was still common, so partial-register renaming was an important feature to make existing machine code run faster. (In practice, many binaries don't get recompiled for new CPUs.)

That's why compilers mostly avoid writing partial registers. They use movzx / movsx whenever possible to zero- or sign-extend narrow values to a full register to avoid partial-register false dependencies (AMD) or stalls (Intel P6-family). Thus most modern machine code doesn't benefit much from partial-register renaming, which is why recent Intel CPUs are simplifying their partial-register renaming logic.
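The difference between a merging partial write and a full-width movzx can be modelled in plain C. This is only a sketch of the architectural semantics, not of any real pipeline; the function names write_al, write_ah and movzx_r32_r8 are made up for illustration. The point is which functions take the old register value as an input.

```c
#include <assert.h>
#include <stdint.h>

/* Model of "mov al, v" on a CPU that does not rename partial registers:
   the new RAX is built from the OLD RAX, so the write depends on it. */
static uint64_t write_al(uint64_t old_rax, uint8_t v) {
    return (old_rax & ~UINT64_C(0xFF)) | v;
}

/* Model of "mov ah, v": the same merge, applied to bits 8-15. */
static uint64_t write_ah(uint64_t old_rax, uint8_t v) {
    return (old_rax & ~UINT64_C(0xFF00)) | ((uint64_t)v << 8);
}

/* Model of "movzx eax, v": the result is zero-extended to the full
   register, so the old value is not an input at all. */
static uint64_t movzx_r32_r8(uint8_t v) {
    return (uint64_t)v;
}

/* Illustrative check of the three models on one starting value. */
void demo_partial_writes(void) {
    uint64_t rax = UINT64_C(0x1122334455667788);
    assert(write_al(rax, 0x01) == UINT64_C(0x1122334455667701));
    assert(write_ah(rax, 0x01) == UINT64_C(0x1122334455660188));
    assert(movzx_r32_r8(0x01) == UINT64_C(0x0000000000000001));
}
```

Only movzx_r32_r8 has no old_rax parameter, which is the software-visible reason it cannot carry a false dependency on whatever last wrote the register.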

As @BeeOnRope's answer points out, compilers still read partial registers, because that's not a problem. (Reading AH/BH/CH/DH can add an extra cycle of latency on Haswell/Skylake, though; see the earlier link about partial registers on recent members of Sandybridge-family.)

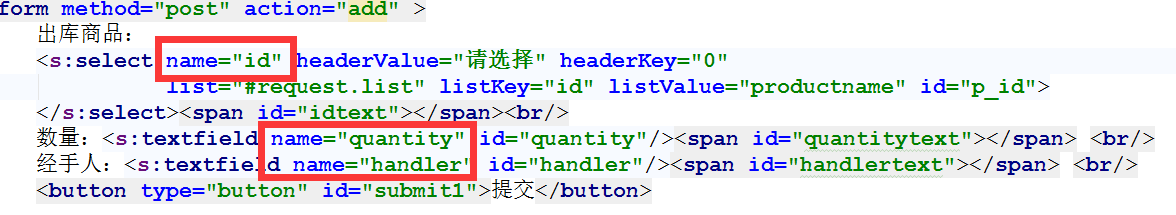

Also note that write takes arguments (int fd, const void *buf, size_t count) that, with GCC as typically configured for x86-64, occupy whole 32-bit and 64-bit registers, so the call couldn't simply be compiled to mov dl, 3. The register size is determined by the type of the data, not the value of the data.

Finally, in certain contexts, C has default argument promotions to be aware of, though that is not the case here.

Actually, as RossRidge pointed out, the call was probably made without a visible prototype.

Your disassembly is misleading, as @Jester pointed out.

For example, mov rdx, 3 is actually mov edx, 3, although both have the same effect: they put 3 in the whole of rdx.

This is true because an immediate value of 3 doesn't require sign-extension, and a MOV r32, imm32 implicitly clears the upper 32 bits of the register.
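The rule can be sketched in C as the check a compiler or assembler might apply when picking the encoding. This is a model under stated assumptions, not GCC's actual logic; mov_r32_imm32 and fits_mov_r32_imm32 are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Architectural effect of "mov r32, imm32" in 64-bit mode:
   the low 32 bits get the immediate, the upper 32 bits become zero. */
static uint64_t mov_r32_imm32(uint32_t imm) {
    return (uint64_t)imm;
}

/* A 64-bit constant can be loaded with the short 32-bit form only if
   zero-extending its low 32 bits reproduces the whole value. */
static bool fits_mov_r32_imm32(uint64_t value) {
    return value <= UINT32_MAX;
}

/* Illustrative check: 3 fits, a 64-bit -1 does not. */
void demo_mov_imm32(void) {
    assert(fits_mov_r32_imm32(3));
    assert(mov_r32_imm32(3) == 3);
    assert(!fits_mov_r32_imm32((uint64_t)-1));
    assert(mov_r32_imm32((uint32_t)-1) == UINT64_C(0x00000000FFFFFFFF));
}
```

A value such as -1 in a 64-bit register fails the check, which is when a sign-extending or full 64-bit form of mov is needed instead.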

In fact, gcc very often uses partial registers. If you look at the generated code, you'll find lots of cases where partial registers are used.

The short answer for your particular case is that gcc always sign- or zero-extends arguments to 32 bits when calling a C ABI function.

The de facto SysV x86 and x86-64 ABI adopted by gcc and clang requires that parameters smaller than 32 bits are zero- or sign-extended to 32 bits. Interestingly, they don't need to be extended all the way to 64 bits.

So for a function like the following on a 64-bit SysV ABI platform:

void foo(short s) {

...

}

... the argument s is passed in rdi and the bits of s will be as follows (but see my caveat below regarding icc):

bits 0-31: SSSSSSSS SSSSSSSS SPPPPPPP PPPPPPPP

bits 32-63: XXXXXXXX XXXXXXXX XXXXXXXX XXXXXXXX

where:

P: the bottom 15 bits of the value of `s`

S: the sign bit of `s` (extended into bits 16-31)

X: arbitrary garbage

The code for foo can depend on the S and P bits, but not on the X bits, which may be anything.

Similarly, for foo_unsigned(unsigned short u), you'd have 0 in bits 16-31, but it would otherwise be identical.
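The caller's side of this convention can be modelled in C as follows. This is a sketch of the bit pattern placed in the low 32 bits of the argument register, under the de facto rule described above; pass_short and pass_unsigned_short are hypothetical helper names, and the upper 32 bits are left out because they are unspecified.

```c
#include <assert.h>
#include <stdint.h>

/* Low 32 bits of the argument register for a (signed) short argument:
   the sign bit is copied into bits 16-31, as movsx would do. */
static uint32_t pass_short(int16_t s) {
    return (uint32_t)(int32_t)s;
}

/* Low 32 bits for an unsigned short argument:
   bits 16-31 are zero, as movzx would do. */
static uint32_t pass_unsigned_short(uint16_t u) {
    return (uint32_t)u;
}

/* Illustrative check of both extensions. */
void demo_arg_extension(void) {
    assert(pass_short(-2) == UINT32_C(0xFFFFFFFE));
    assert(pass_short(5) == UINT32_C(0x00000005));
    assert(pass_unsigned_short(0xFFFE) == UINT32_C(0x0000FFFE));
}
```

The same 16-bit pattern 0xFFFE ends up as 0xFFFFFFFE or 0x0000FFFE depending only on the declared parameter type.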

Note that I said de facto, because it actually isn't really documented what to do for smaller return types, but you can see Peter's answer here for details. I also asked a related question here.

After some further testing, I concluded that icc actually breaks this de facto standard. gcc and clang seem to adhere to it, but gcc only in a conservative way: when calling a function, it does zero/sign-extend arguments to 32 bits, but in its function implementations it doesn't depend on the caller doing it. clang implements functions that depend on the caller extending the parameters to 32 bits. So in fact clang and icc are mutually incompatible even for plain C functions if they have any parameters smaller than int.
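To make the incompatibility concrete, here is a small C model of two callees receiving a short in rdi. It is a sketch of the two behaviours described above, not actual compiler output; callee_reextends and callee_trusts_caller are hypothetical names, and the 0xDEADBEEF upper half stands in for arbitrary garbage.

```c
#include <assert.h>
#include <stdint.h>

/* Conservative callee: ignores bits 16-31 and sign-extends the low
   16 bits itself, so it works whether or not the caller extended. */
static int32_t callee_reextends(uint64_t rdi) {
    int32_t low16 = (int32_t)(rdi & 0xFFFF);
    return (low16 ^ 0x8000) - 0x8000;   /* portable 16-bit sign extension */
}

/* Trusting callee: assumes the caller already extended to 32 bits and
   simply reads the low 32 bits of the register as an int. */
static int32_t callee_trusts_caller(uint64_t rdi) {
    int64_t low32 = (int64_t)(rdi & UINT64_C(0xFFFFFFFF));
    return (int32_t)((low32 ^ INT64_C(0x80000000)) - INT64_C(0x80000000));
}

/* Illustrative check: passing s = -2 with and without caller extension. */
void demo_callee_models(void) {
    uint64_t extended     = UINT64_C(0xDEADBEEFFFFFFFFE); /* bits 16-31 = sign */
    uint64_t not_extended = UINT64_C(0xDEADBEEF0000FFFE); /* bits 16-31 not set */

    assert(callee_reextends(extended) == -2);
    assert(callee_trusts_caller(extended) == -2);

    assert(callee_reextends(not_extended) == -2);
    assert(callee_trusts_caller(not_extended) == 65534);
}
```

Both callees agree when the caller extends the argument; only the trusting one returns a wrong value when the caller leaves bits 16-31 unextended.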

Note that -O3 explicitly asks the compiler to aggressively favor performance over code size. Use -Os (optimize for size) if you are not ready to sacrifice around 20% of size.

On something like the original IBM PC, if AH was known to contain 0 and it was necessary to load AX with a value like 0x34, using "MOV AL,34h" would generally take 8 cycles rather than the 12 required for "MOV AX,0034h", a pretty big speed improvement (either instruction could execute in 2 cycles if pre-fetched, but in practice the 8088 spends most of its time waiting for instructions to be fetched at a cost of four cycles per byte). On the processors used in today's general-purpose computers, however, the time required to fetch code is generally not a significant factor in overall execution speed, and code size is normally not a particular concern.
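The 8 versus 12 cycle figures follow directly from the instruction lengths. A minimal C sketch of that arithmetic, assuming the four-cycles-per-byte fetch cost quoted above and ignoring everything else about 8088 timing (fetch_bound_cycles is a hypothetical helper):

```c
#include <assert.h>

/* Fetch cost per instruction byte on the 8088, as quoted in the text. */
enum { FETCH_CYCLES_PER_BYTE = 4 };

/* 16-bit encodings of the two instructions being compared. */
static const unsigned char mov_al_34h[]   = { 0xB0, 0x34 };        /* MOV AL,34h   */
static const unsigned char mov_ax_0034h[] = { 0xB8, 0x34, 0x00 };  /* MOV AX,0034h */

/* Cycles needed when execution is limited purely by instruction fetch. */
static unsigned fetch_bound_cycles(unsigned instruction_bytes) {
    return instruction_bytes * FETCH_CYCLES_PER_BYTE;
}

/* Illustrative check: 2 bytes cost 8 cycles, 3 bytes cost 12. */
void demo_8088_fetch_cost(void) {
    assert(fetch_bound_cycles(sizeof mov_al_34h) == 8);
    assert(fetch_bound_cycles(sizeof mov_ax_0034h) == 12);
}
```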

Further, processor vendors try to maximize the performance of the kinds of code people are likely to run, and 8-bit load instructions aren't likely to be used nearly as often nowadays as 32-bit load instructions. Processor cores often include logic to execute multiple 32-bit or 64-bit instructions simultaneously, but may not include logic to execute an 8-bit operation simultaneously with anything else. Consequently, while using 8-bit operations when possible was a useful optimization on the 8088, it can actually be a significant performance drain on newer processors.